The Introduction of Data Analytics into Calibration

NEW DIGITAL OPPORTUNITIES IN THE CHEMICAL INDUSTRY

Executive Summary

Many companies in the Chemical industry see calibration as something that is time consuming and a drain on resources. Often calibration is done to ensure correct process batching and to avoid material waste. Another reason to calibrate is to ensure compliance with safety and environmental regulations. A considered calibration routine can improve measurement accuracies that in turn can lead to better process performance and tighter control limits, improved product quality, energy savings or even prevent environmental and safety issues.

In this article we explain how digitalization can be used to optimize calibration routines. We discuss a balanced approach which achieves cost-savings from increasing calibration intervals where possible, yet mitigates risk by decreasing intervals where absolutely necessary. Digitalization is a true source of opportunity for chemical manufacturers.

Authors:

Ian Nelson, Industry Manager Strategic Business, Endress+Hauser UK

Peter Gijbels, Global Portfolio Manager, Endress+Hauser Group Services

In the Chemical industry, instrument calibration increases measurement accuracy, which in turn increases production reliability. Making use of the calibration data provided by the calibration process can increase outputs, reduce costs, improve process safety and expand knowledge.

Calibration: The hidden opportunity

Primarily, digitalization can optimize calibration intervals, reducing cost and mitigating risk. However, it also gives confidence that processes are operating within tolerance. By eliminating inaccurate measurements, it assures product compliance, reduces energy inefficiency and costly raw material loss, and improves other production KPIs. Additionally, accurate and consistent results from quality-critical devices ensure product quality. Optimization of calibration intervals can also improve process safety, mitigating risk to staff, which is always an important concern within the Chemical industry.

Opportunities for improved maintenance strategies can also be created, allowing a transition from corrective maintenance to condition-based monitoring, and preparation for future predictive maintenance. Predicting when a device will need maintenance would allow essential work to be planned around production schedules, improving the maintenance activities during planned shutdowns and reducing unplanned shutdowns. Materials can be ordered in advance, keeping the impact on the production process to a minimum and leading directly to higher availability and process performance.

There is a further, less well-known side effect. An optimal calibration process, possibly combined with a condition-based monitoring system, can bring certainty that drifting measured values are caused by changed process conditions rather than by instrument drift. Hence, well-performing instrumentation will detect changed process conditions.

Digitalization is already a familiar concept within chemicals production; however, it has so far only been applied to production rather than calibration data.

This whitepaper looks at how digitalization can be used to optimize calibration routines; it discusses a balanced approach which achieves cost-savings from increasing calibration intervals where possible, yet mitigates risk by decreasing intervals where absolutely necessary.

This approach employs a proven scientific method using unique artificial intelligence (AI) techniques, called Calibration Interval Optimization, or CIO.

Current calibration practices and problems

Many companies have a reactive approach, only calibrating when local regulations or quality requirements force them to do so. Others may use arbitrarily fixed dates that fit in with planned maintenance schedules rather than being driven by data.

Yet incorrect adjustments after poorly executed calibration can be worse than no calibration at all. This problem becomes particularly acute when manufacturers use third-party calibration companies just to obtain a certificate. Such companies typically adjust towards standards but do not allow for effects on the process. In one example, a company spent years making unnecessary adjustments to flow meters, due to a lack of instrument and application knowledge, resulting in poor batches.

Such problems can be avoided by using calibration companies with ISO 17025 or other accreditations, allowing them to deliver traceable results and therefore guaranteed performance.

Additionally, fixed calibration intervals that do not account for process conditions may not only increase cost; every interaction with a device introduces risk to the process. In the Chemical industry there is a tendency to prevent people accessing the field for unnecessary decommissioning and deinstallation of equipment. Risk of chemical product exposure and costly access requirements lead to a reduction in these actions. Calibrating only when needed will significantly reduce these risks and will certainly save costs.

How better calibration planning can improve production KPIs

By facilitating data-driven calibration interval optimization, digitalization achieves an optimal balance between cost-saving and risk mitigation. It can also improve production KPIs such as Overall Equipment Effectiveness (OEE).

Better calibration planning reduces unplanned downtime losses due to devices that are failing or have drifted out of tolerance (OOT); this improves availability. It also allows early detection of measurement drift, reducing corrective action time and effort, and improving performance.

Additionally, quality losses can be reduced, and quality improved, as calibration can reduce waste, non-conformities, and OOT events.

All these factors have a positive impact on OEE levels, as shown in Fig. 1 below.
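To make the link to OEE concrete, the following minimal sketch uses the standard definition of OEE as the product of availability, performance and quality. The before and after figures are purely hypothetical and are not taken from any customer dataset.

```python
def oee(availability: float, performance: float, quality: float) -> float:
    """Overall Equipment Effectiveness: product of three factors, each in 0..1."""
    factors = {
        "availability": availability,
        "performance": performance,
        "quality": quality,
    }
    for name, value in factors.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return availability * performance * quality


# Hypothetical figures before and after better calibration planning.
before = oee(availability=0.90, performance=0.92, quality=0.97)
after = oee(availability=0.93, performance=0.94, quality=0.985)
print(f"OEE before: {before:.1%}, after: {after:.1%}")
```

Because the three factors multiply, small gains in each one compound: in this illustration OEE rises from about 80.3% to about 86.1%.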

Digitalization as a source of opportunity

Calibration intervals can be optimized if they are dynamic, and driven by data, rather than static and based on arbitrary reasons – but how do we obtain the necessary data?

An approach now possible is to feed any calibration interval calculation with process instrument data collected using Industrial Internet of Things (IIoT)-enabled measurement technology and digital applications. This process can be described by two key definitions:

These can be considered as steps in a larger process of digital transformation – the transformation of business, enabled by digitized content.

Here, we introduce some aspects of digitalization, showing its contribution to data-driven calibration interval decisions.

Many field instruments have digital communications capabilities; accordingly, their data can be collected by a local area network at the edge of an IIoT infrastructure. From here, it can be delivered to a Cloud server and made available for analysis by smart algorithms that can perform optimized calibration interval calculations.

Applications and information can also be delivered to technicians’ and managers’ mobile devices and PCs at any time, in any location. Digital twins of the actual field instruments, which are often difficult to access, can also be rendered in the cloud to give ready transparency to the measured data.

In many chemical plants, it is possible to open up such channels without impacting existing control systems. In any case, security can be preserved by ensuring that information is unidirectional, flowing only from the field instrumentation to the cloud. Accordingly, digitization can add value, and contribute to data-driven strategies, whilst reducing risks. Digitalization in turn helps to ensure that deviations in production processes, and thus critical product deviations, are recognized early enough to avoid costly interventions or even product recalls.

Applying digitalized data to calibration interval optimization

Many methods and recommendations for dynamic calibration interval optimization exist. International examples include ILAC G24, NCSLI-RP1, GAMP 5 appendix A, GMP 11 and the NASA Handbook, along with other publicly-available methods and proprietary solutions offered by instrumentation manufacturers.

While cost and risk must be balanced, most methods use a risk-based approach; this starts by defining the acceptable risk to derive the resultant cost, rather than vice-versa.

However, the definition of ‘acceptable risk’ of an instrument being Out Of Tolerance is not fixed across a chemical manufacturing plant. Instead, each instrument’s acceptable risk is related to the criticality of its measured value. We can accept more Out Of Tolerance risk for non-critical devices to help reduce calibration costs, while a highly critical device would warrant greater investment to secure a lower Out Of Tolerance risk. Accordingly, effective interval optimization depends on a proper definition of device criticality.

The definition of when a device is Out Of Tolerance is equally important. In these circumstances, the ‘Maximum Permissible Error’ or MPE indicates when the measurement deviation transgresses operational requirements. Effective interval optimization requires that MPEs should reflect operational requirements rather than just a device’s theoretical capabilities under ideal conditions.
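As a minimal sketch of how these two definitions could be recorded per instrument, the example below pairs a criticality class with an acceptable Out Of Tolerance risk and checks an as-found error against the MPE. The class names, the risk values, the tag `FT-101` and the field names are all hypothetical assumptions chosen for illustration; they are not values from any standard or vendor tool.

```python
from dataclasses import dataclass

# Hypothetical mapping: criticality class -> acceptable probability of being
# Out Of Tolerance at the next calibration. Real values are a site decision.
ACCEPTABLE_OOT_RISK = {"high": 0.01, "medium": 0.05, "low": 0.15}


@dataclass
class CalibrationResult:
    tag: str                # instrument tag (illustrative)
    criticality: str        # "high", "medium" or "low"
    mpe: float              # Maximum Permissible Error, in measurement units
    as_found_error: float   # deviation found at calibration, same units

    @property
    def mpe_usage(self) -> float:
        """Fraction of the MPE consumed by the as-found error."""
        return abs(self.as_found_error) / self.mpe

    @property
    def out_of_tolerance(self) -> bool:
        """True when the as-found error exceeds the MPE."""
        return abs(self.as_found_error) > self.mpe


result = CalibrationResult("FT-101", "high", mpe=0.5, as_found_error=0.35)
print(result.tag, f"uses {result.mpe_usage:.0%} of its MPE,",
      "OOT" if result.out_of_tolerance else "within tolerance,",
      f"acceptable OOT risk {ACCEPTABLE_OOT_RISK[result.criticality]:.0%}")
```

Keeping the MPE tied to operational requirements, rather than to the data sheet, is what makes the `mpe_usage` figure meaningful for later interval decisions.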

In considering methods for interval optimization, the most holistic work to date is published by the National Conference of Standards Laboratories International (NCSLI) as their recommended practices RP1 [i]. This categorizes interval optimization into two methods: reactive and statistical.

A good example of a reactive method is provided by the Good Automated Manufacturing Practice or GAMP 5 [ii]. However, this method suffers several disadvantages:

- When calibrations fail, interval reduction is not considered

- Method is reactive and does not provide any predictions

- The maximum interval is arbitrarily fixed at two years

- Only conformity status (pass/fail) is considered, not measurement error size

The NCSLI concludes that most reactive methods are, in general, less effective than statistical methods in terms of establishing intervals to meet reliability objectives.

Statistical methods rely on complex mathematics to determine so-called “maximum likelihood estimations”. While these methods offer some improvements over reactive methods, the NCSLI acknowledges they are “expensive to design and implement.” They typically require a large installed base and a significant amount of data to be feasible and/or cost-effective. However, they can work if they are reliable enough and bring comprehensive results. Additionally, their development has created a solid basis for creating more advanced methods that further maximize benefits and reduce drawbacks.

Advanced statistical methods

Examples of such calibration interval optimization methods include one that uses reliability models and another that employs Monte Carlo simulation. While their approaches are different, they share some common input factors. Both consider the drift detected during calibration, rather than simply whether the calibration passed or failed. They also relate the measurement error to the maximum permissible error (MPE); this reduces risk compared with GAMP 5 Appendix 1, which suggests increasing intervals after three consecutive calibration passes – irrespective of how close the detected measurement error is to the MPE.
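The difference between a pass/fail rule and a rule that relates the error to the MPE can be sketched as follows. This is an illustration only: the doubling step, the 0.3 and 0.7 thresholds, the 60-month cap and both function names are assumptions made for this example, and neither function reproduces the actual GAMP 5 procedure or any vendor algorithm.

```python
def reactive_interval(current_months: int, passes: list[bool],
                      max_months: int = 24) -> int:
    """Pass/fail-only rule: extend after three consecutive passes.

    Failures never shorten the interval and the cap is fixed at two years,
    mirroring the disadvantages listed for reactive methods. The doubling
    step is an assumed value.
    """
    if len(passes) >= 3 and all(passes[-3:]):
        return min(current_months * 2, max_months)
    return current_months


def error_aware_interval(current_months: int, error: float, mpe: float,
                         max_months: int = 60) -> int:
    """Rule that looks at how much of the MPE the latest error consumes.

    Thresholds are hypothetical and chosen only to illustrate the idea.
    """
    ratio = abs(error) / mpe
    if ratio > 1.0:                       # already Out Of Tolerance
        return max(1, current_months // 2)
    if ratio > 0.7:                       # passing, but close to the limit
        return max(1, round(current_months * 0.75))
    if ratio < 0.3:                       # comfortable margin
        return min(current_months * 2, max_months)
    return current_months


# Three passes in a row, but the latest error sits at 98% of the MPE.
print(reactive_interval(12, [True, True, True]))        # 24
print(error_aware_interval(12, error=0.49, mpe=0.5))    # 9
```

For the same device history the pass/fail rule doubles the interval, while the error-aware rule shortens it because the device is about to leave tolerance.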

The metrological criticality of measured points, related to quality and performance, is also factored in, to ensure extra caution in managing highly critical devices. Other factors for consideration include device types and their susceptibility to drift.

An advantage of both methods is that they require very little historical data. In fact, the first method can calculate new intervals based on one calibration result only. The second method can be used if at least two consecutive results are available.
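To show how a Monte Carlo approach can work with only two consecutive results, the sketch below projects a linear drift forward and estimates the probability of exceeding the MPE at a given horizon. It is a simplified illustration, not the method used in any commercial tool. The linear drift model, the assumption that the device is not adjusted at calibration, the normally distributed calibration uncertainty `noise_sd`, and all function names and figures are assumptions.

```python
import random


def oot_probability(prev_error: float, last_error: float,
                    months_between: float, horizon_months: float,
                    mpe: float, noise_sd: float,
                    n_trials: int = 10_000, seed: int = 0) -> float:
    """Estimate P(|error| > MPE) at horizon_months after the last calibration."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    exceed = 0
    for _ in range(n_trials):
        # Perturb both observed errors by the assumed calibration uncertainty.
        e_prev = prev_error + rng.gauss(0.0, noise_sd)
        e_last = last_error + rng.gauss(0.0, noise_sd)
        drift_per_month = (e_last - e_prev) / months_between
        projected = e_last + drift_per_month * horizon_months
        if abs(projected) > mpe:
            exceed += 1
    return exceed / n_trials


def longest_safe_interval(prev_error: float, last_error: float,
                          months_between: float, mpe: float, noise_sd: float,
                          acceptable_risk: float, max_months: int = 60) -> int:
    """Longest whole-month interval whose estimated OOT risk stays acceptable."""
    best = 0
    for months in range(1, max_months + 1):
        risk = oot_probability(prev_error, last_error, months_between,
                               months, mpe, noise_sd)
        if risk > acceptable_risk:
            break
        best = months
    return best


# Hypothetical device: errors of 0.10 and 0.16 found 12 months apart, MPE 0.5.
interval = longest_safe_interval(0.10, 0.16, months_between=12, mpe=0.5,
                                 noise_sd=0.02, acceptable_risk=0.05)
print(f"Suggested maximum interval: {interval} months")
```

The acceptable risk passed in would come from the device criticality, so a highly critical device receives a shorter interval from the same drift data. As the following section explains, a result like this still needs expert review before any interval is changed.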

Implementation practicalities

While these interval optimization approaches are data-driven, they also require a consultant with the necessary metrology expertise and experience to analyze and interpret results. Blindly following data-based recommendations is not enough, as there are too many factors that an algorithm cannot process, although a qualified human can.

For example, if the measurement error is close to the MPE, any good method would suggest decreasing the interval. While the method is effective in highlighting that action is required to manage risk, decreasing the interval may not be the best, or only, solution. Sometimes it makes more sense to replace the device, migrate to different technology, or simply clean the sensor.

Nevertheless, this approach can work for some smaller enterprises. Calculation results, plus expert interpretation, may indicate a safe interval extension. Another calculation after the next calibration would indicate whether the interval was still suitable; the algorithm would provide early detection of any drift. Accordingly, the annual number of calibrations can be decreased while also mitigating risk.

Overcoming limitations

However, this approach is frequently unsuitable. Many chemical manufacturers with thousands of devices on site will not have time to review every interval after each calibration. For these cases, an alternative approach that takes advantage of data-driven optimization and its associated risk management can be used. It focuses on devices that are quality-critical or in high-risk areas.

At first, as part of the implementation project, optimal intervals are calculated for every device. As described previously, the calculated results must be reviewed and interpreted before new intervals can be set. This is the only time that change control is involved. Intervals are increased where possible to reduce operating costs, and intervals are decreased where necessary to manage risk.

During operations, optimal calibration intervals are still calculated for each device after every calibration. In this way, the behavior of the devices is monitored and drifts are detected early. Accordingly, operators can mitigate the risk of finding a device to be Out Of Tolerance at the next calibration.

At the review, only devices for which a risk is detected, or which are quality-critical or in high-risk areas, need attention. For these, an intermediate work order for calibration or other necessary action is performed to avoid change control management. Nevertheless, after this additional calibration, an interval calculation is necessary. This confirms any possible drift and indicates whether the device can be calibrated at its initial frequency. Another indirect advantage of this alternative process is that all these detections and related actions can be documented and later used in change management procedures. Hence, using the collected outcomes from (for example) the previous three years rather than earlier experience can provide easier, data-driven change control.

Some tangible benefits of calibration interval optimization

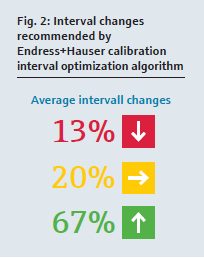

Ultimately, the value of calibration interval optimization depends entirely on how well it performs. Fig. 2 summarizes the results of all the calibration interval optimizations completed by Endress+Hauser to date. It shows the average interval changes suggested by their algorithm, in which only 20% of the analyzed instruments were set up with an optimal calibration interval. The remaining 80% should have their intervals changed to achieve an optimum cost/risk balance.

13% of the instruments are recommended for more frequent calibration to manage risk. However, the majority of intervals can be significantly increased. The intervals for 67%, or two-thirds, of all the instruments could safely be doubled or tripled. This leads to the conclusion that today most chemical manufacturers are calibrating excessively, with a huge potential for cost savings. These numbers are based on average results obtained from analysis of real customer datasets.
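A rough feel for what these shares could mean for calibration workload can be obtained with simple arithmetic. The sketch below is illustrative only. It assumes every instrument starts on the same interval, that the 13% have their interval halved (the source does not state by how much), and that the 67% have theirs doubled or tripled. The function name is hypothetical.

```python
def relative_workload(share_unchanged: float, share_shortened: float,
                      share_extended: float, shorten_factor: float,
                      extend_factor: float) -> float:
    """Calibrations per year relative to today (1.0 means no change).

    Each share is a fraction of the installed base; each factor is the
    multiplier applied to the calibration interval of that group.
    """
    return (share_unchanged
            + share_shortened / shorten_factor
            + share_extended / extend_factor)


# Shares from the text; the 0.5 shortening factor is an assumption.
doubled = relative_workload(0.20, 0.13, 0.67, shorten_factor=0.5, extend_factor=2)
tripled = relative_workload(0.20, 0.13, 0.67, shorten_factor=0.5, extend_factor=3)
print(f"Relative workload: {doubled:.1%} (doubled) to {tripled:.1%} (tripled)")
```

Under these assumptions the yearly number of calibrations falls to roughly 80% of today's level if the extended intervals are doubled and to roughly 68% if they are tripled, even though 13% of the devices are calibrated twice as often.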

In one case, a manufacturer believed that they were calibrating too frequently. As they had never experienced any non-conformity problems, they felt that they could safely extend their intervals, but could not judge the extent possible. Increasing intervals randomly is risky, while conservative increases typically take years until savings become visible.

Instead, they decided to engage with Endress+Hauser and apply a calibration interval optimization process. Work to date suggests an ROI of 126% over the next five years. Benefits to the customer include not only lower direct calibration costs, but also reduced device de-installation and re-installation effort, along with less planning effort and fewer work permits needed.

The manufacturer decided to use the money previously spent on unnecessary calibration to maintain devices not formerly included in their maintenance schedule. This allowed them to improve the overall reliability of their plant, leading to other cost reductions. Additionally, due to reduced handling and build-out/build-in of instrumentation, they significantly reduced the risk of chemical product exposure, as well as saving costs.

Ongoing development: Predictive reliability

While we have shown the potential for calibration interval optimization to balance cost-saving against risk, further opportunities for improving productivity and reducing downtime through predictive maintenance are available; these can be facilitated through device-embedded diagnostics.

One possibility is an approach called predictive reliability. This addresses the problem that, even with advanced calibration interval optimization, device status between two calibrations is invisible. Performing a calibration is the only certain way of knowing that a device is still within tolerance.

Accordingly, advanced technologies such as Heartbeat instruments – which provide advanced diagnostics, monitoring and verification capabilities – can provide diagnostic data for condition-based monitoring. Such a system can trigger an event when an Out Of Tolerance condition is likely to have occurred.

The data can be made available through IIoT infrastructures to cloud-based artificial intelligence, which constantly monitors device metrological health status. Smart algorithms can also predict the instrument’s remaining useful life, or when it will run Out Of Tolerance.

This will give users early warning before the Out Of Tolerance condition occurs, and sufficient time to schedule a preventative action. This could, for example, be to bring calibration forward, to clean or replace the sensor, or whatever else is necessary to avoid a non-conformity. The process can be kept within tolerance, and required performance ensured, without compromising product quality or safety.
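One simple way to picture such a prediction is to fit a straight line to periodic deviation readings and extrapolate to the point where it crosses the MPE. The sketch below does this with ordinary least squares. It is not the algorithm used by any product: the linear trend, the availability of deviation readings between calibrations, the function names and the sample data are all assumptions.

```python
from typing import Optional, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def months_until_oot(months: Sequence[float], deviations: Sequence[float],
                     mpe: float) -> Optional[float]:
    """Months from the latest reading until the fitted trend reaches +/-MPE.

    Returns None when the fitted trend is flat, and 0.0 when the trend has
    already reached the limit.
    """
    slope, intercept = fit_line(months, deviations)
    if slope == 0:
        return None
    limit = mpe if slope > 0 else -mpe      # limit the trend is heading for
    crossing = (limit - intercept) / slope  # time at which the line hits it
    return max(crossing - months[-1], 0.0)


# Hypothetical quarterly deviation readings for one device, MPE of 0.5.
remaining = months_until_oot([0, 3, 6, 9], [0.10, 0.16, 0.22, 0.28], mpe=0.5)
print(f"Estimated months until Out Of Tolerance: {remaining:.1f}")
```

With this sample data the trend reaches the MPE about 11 months after the latest reading, which is the kind of lead time that would allow a calibration or sensor cleaning to be scheduled in advance.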

Note that this predictive approach is still under development, and not yet available as a solution. The Heartbeat technology, however, is already available for use as a condition-based monitoring tool.

Conclusion

The benefits of calibration interval optimization outweigh the risks, because it is not just about saving costs; instead, it establishes an optimized interval that reduces maintenance costs without creating risk of instruments going out of tolerance. Expert input will lead to better instrument usage and performance, which reduces costs while mitigating risk.

Better exploiting available data specifically for optimization reduces the complexity and the cost of its disclosure and conversion into knowledge. This addresses problems arising from reduced availability of knowledge workers.

It can also help to mitigate inappropriate or bad adjustments – and bad adjustments can be much worse than no calibration.

By using digitalization to facilitate data-driven calibration interval optimization, chemical manufacturers can contribute to their environment of smarter decision-making. This amplifies the intelligence that exists in the business, helping to increase outputs, increase process safety, reduce energy costs, improve processes, enhance skills, and expand knowledge.

With thanks to